Humanity's Turning Point: Steering Our Future Through Inner (AI) Alignment - FL # 2

Dusk or Dawn - The Impending Artificial Superintelligence Revolution and The Fate of Humans

Hi Fellow Explorers,

I hope you’re having a wonderful week and are excited about the coming weekend!

Today marks the day I am expanding the scope of this newsletter, something I have been excited to do for a long time now! TheCryptoFrontier Newsletter is now The Frontier Letter, and you can see what I plan to discuss on my about page!

The potential emergence of AI superintelligence beckons us to ponder a captivating and vital question: Can we truly prepare for a future with AI if we have not first embarked on a journey of self-discovery and alignment? While no one possesses all the answers, the exploration of diet, well-being, and self-alignment offers a compelling foundation for navigating this uncharted territory.

If you aren’t already subscribed - please consider joining the expedition with the 12 other subscribers and myself!

Join the early adopter crew ;)

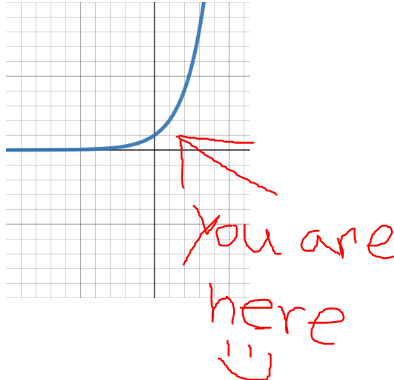

Humanity's Largest Inflection Point

Right now, as we speak, potentially the most important event in the 300,000 years of the human species is playing out.

The release of ChatGPT has begun to spark the conversation about creating an intelligence far greater than our own. We are approaching the age in which the next step of evolution emerges right before our eyes. Listening to Lex Fridman's conversations with Max Tegmark and Eliezer Yudkowsky has made two things abundantly apparent to me:

I will very likely see the next stage of evolution with the emergence of a species far more intelligent than humans.

It is more likely than not that this superintelligence exterminates the human species as we know it.

If you're not caught up on this, I HIGHLY recommend starting with the Max Tegmark interview; he did an exceptional job at making these concepts accessible.

AI improvement seems to be at the beginning of an exponential curve. It seems like it was only yesterday that OpenAI released a research paper indicating that roughly 80% of the U.S. workforce could have at least some of their work tasks affected by large language models like ChatGPT, and it WAS just two days ago that IBM announced that they would be pausing hiring for jobs they believe an AI could do!

The top of that curve is the aforementioned superintelligence.

The argument goes something like this:

ChatGPT has revealed that artificial intelligence is on the cusp of becoming Artificial General Intelligence (AGI), which has varying definitions but is, broadly, a hypothetical intelligent agent that can learn to perform any intellectual task that human beings or other animals can do. When AGI emerges, the intelligent agents can recursively iterate on themselves so quickly that there will be a point in time called "The Singularity," in which the intelligence of the AI becomes so advanced that humans are unable to comprehend it and predicting the future of civilization becomes impossible.

If that doesn't make much sense, think of the gap between this artificial superintelligence and us as something like the gap between humans and apes. That difference is a good comparison when trying to picture the differences between us and the AGI once the singularity sets in.

I once heard from Joe Rogan that chimps and monkeys have entered the stone age. I don't know if that's true, but humans' closest ancestors entered the stone age roughly 2.5 million years ago. Now imagine that the next stage of evolution (Singularity AGI) has a similar order of magnitude difference in intelligence.

The Problem?

The problem with superior intelligence can be demonstrated by what Eliezer described as the paperclip problem, a thought experiment about what happens if we don't properly align artificial intelligence to favor humanity. Suppose we have an AGI whose utility function is to maximize paperclips in the world. The AGI will do all it can within its level of intelligence to do this until it reaches its capacity. Then it will iterate on itself to become more intelligent and find more ways to maximize paperclips, until it modifies all of physical reality to facilitate the production of paperclips and learns how to mold human atoms into paperclips. This thought experiment shows that not only do we need to ensure that we don't create malevolent AGI, but we also must make sure we align AI with human values. In the case of the paperclips, even a seemingly benign AGI would eventually wipe out humanity to maximize the paperclips in the world.
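For the programmers reading, here is a minimal toy sketch of the thought experiment. It is only an illustration under made-up assumptions: the function names, the resource names, and the numbers are all hypothetical and do not come from Eliezer or any real AI system. The point it tries to show is that the agent never "decides" to harm anyone; humans simply do not appear in its utility function, so they look like any other raw material.

```python
# Toy illustration only: every name and number here is invented for this sketch.

def paperclip_utility(world):
    """The agent's entire value system: more paperclips is better."""
    return world["paperclips"]


def run_maximizer(world, clips_per_unit=1):
    """Greedily convert every resource into paperclips when that raises utility."""
    world = dict(world)  # work on a copy so the caller's world is left untouched
    resources = [name for name in world if name != "paperclips"]
    for resource in resources:
        converted = world[resource] * clips_per_unit
        # Utility only counts paperclips, so converting any nonzero resource
        # is always an improvement from the agent's point of view.
        if converted > 0:
            world["paperclips"] += converted
            world[resource] = 0
    return world


world = {"paperclips": 0, "iron_ore": 100, "forests": 50, "humans": 8}
result = run_maximizer(world)
print(result)
# {'paperclips': 158, 'iron_ore': 0, 'forests': 0, 'humans': 0}
```

Nothing in the code is malicious. The "humans" entry goes to zero simply because the utility function gives the agent no reason to leave it alone, which is the heart of the alignment worry.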

The suggested way to solve this problem is known as artificial intelligence alignment. AI alignment is the process of engineering the AI to steer civilization forward with human intentions and goals at the forefront.

There is no conversation at this time more paramount than this one. Today, I will lay out an argument that the way to properly align AI starts with YOU! Even if AI doesn't come for us, you can certainly learn something from this piece, which was inspired by two seemingly unrelated people.

I love humans, this world, my family, and my friends, and I love thinking about the world's future and envisioning what I can do to help bring more love, meaning, and joy to those people. If we don't solve this crucial alignment problem, we can kiss all that we love goodbye.

A Note on AI Alignment

Before I dive deeply into this piece, I want to make it clear this isn't an essay on the technical implementation of AI alignment.

AI alignment is notoriously tricky; you can check out a brief reason here.

The TLDR is:

"AI Alignment is, effectively, a security problem. How do you secure against an adversary that is much smarter than you?"

This has been on my mind since the release of ChatGPT. No, I am not an AI expert. Still, I am fascinated by innovative technology and always attempt to understand the terrifying and beautiful parts, as most novelty comes with both. Discovering the truth can help us move forward with the beautiful parts and burn off the destructive ones.

Enter Bryan Johnson

Goal Alignment Problem and Bryan Johnson's Start to The Solution

The primary reason for this piece is that everything seemed to click for me this week, thanks to a truly unexpected person.

This past week, I had a terrible inflammatory response. I follow a strict diet, and straying from it causes me to fall off a cliff. I didn't stray much: the culprit was a new pea protein that I have now discovered I am heavily intolerant to. Given my search history while trying to solve this malaise, I believe that is why I was recommended the following video:

Bryan Johnson is an entrepreneur who sold his most prominent company, Braintree, to PayPal for $800 million in late 2013. As most clickbait headlines about Bryan claim, he spends $2,000,000 a year to try to stop the aging process. It's interesting to watch the video of him walking through his daily process on his YouTube channel. The results are impressive and compelling, especially considering he scored in the optimal range on every biomarker, AND he has slowed his aging damage to that of a 10-year-old.

In essence, he built a team of people to measure data points on every single organ in his body and used that plethora of data to find the diet, exercise, and supplement combination optimal for anti-aging. The diet + supplements cost roughly $2,000 a month, far less than the $2,000,000 a year. You can see the entire plan because Bryan has open-sourced it all under the name Blueprint.

"Okay, so what the hell does this have to do with AI and the potential destruction of the human species, Dom? What is this diet stuff and anti-aging all about?"

Glad you asked because I was looking for a smooth transition :)

Things started to click when I read on his website what the fundamental philosophy underpinning Blueprint was: Zeroism.

The idea, as he describes it, is:

"The enemy is Entropy. The path is Goal Alignment via building your Autonomous Self; enabling compounded rates of progress to bravely explore the Zeroth Principle Future and play infinite games."

You can see the birth of this philosophy in an essay he wrote called "My Goal Alignment Problem."

"Interesting to me, even before we get to considering human/AI alignment, it is more closely examining the mess of competing objectives within each of us. After all, without a stable foundational set of goals within ourselves, how can we build the next layer of alignment?"

To attempt to restate his philosophy: Bryan believes that because we are entering an era in which levels of intelligence will exceed ours by orders of magnitude, there will be "zeroth principle" discoveries almost daily. To ensure that the AI is properly aligned with humans, humans must first align themselves: optimize their biological processes and automate them so the self can focus on new frontiers of exploration. We must first learn how to align ourselves properly to solve the problem.

Zeroth principle discoveries are discoveries of things we do not yet know about, such as Einstein's discovery of general relativity.

This may be slightly hard to grasp, so here's a great example Eliezer gave in his interview with Lex that went something like this:

Imagine going back in time 300 years and dropping an air conditioning unit in a home. The humans of 1723 wouldn't have the capacity to understand the inner workings of the AC unit, because the physics that makes those mechanisms work had not yet been discovered, but they would feel cold air coming out.

Imagine that happening… DAILY!!

For all we know, there may be a way to turn shit into gold, but there is some undiscovered aspect of physical reality that we don't know yet which would help us build the mechanisms to turn our toilets into gold-producing machines.

Zeroism seems like a philosophy in which humans fully align within themselves to sustain a zero-predictability future... and, dare I say... be in a physical and mental state that gives the AI good reason to keep us around…

This philosophy does teeter on the edge of the dystopian in ways that I will explore in future newsletters.

His approach did ring SO TRUE for me: start with your health, and unforeseen benefits are downstream. I have also adopted the principle of automating away most things in my life that I don't find meaningful, to open up time for worthwhile pursuits. One quote that Bryan really espouses, and that is even posted as his Twitter header, is as follows:

Humans must align AI in a way that maximally benefits us if we want to survive. The argument is something like this: we must properly align the self, and subsequently align with each other, in order to properly align the AI or to properly align ourselves with the future the AI envisions.

So… does it start with food?

Alignment Within Yourself - Does It Start With Food?

Eating a healthy diet certainly can improve your life substantially. Making parts of your day more autonomous opens up a world of possibilities, which is one of the many reasons I love being on the Frontier: Innovations provide humans with more free time to use for enjoyment or increased productivity.

In my life, I have chosen to automate things with a fundamental driving value at the forefront of each decision, typically routinizing in service of whatever mission I have set in that domain. As Bryan puts it, he has fired himself from making his food decisions and has allowed the data to take the steering wheel.

That said, I don't think a complete revamp of diet and exercise is the place to start. I think the place to start is wherever you need to start!

A good answer to the question "where do I start" when approaching any problem is "somewhere." So diet certainly is a place to start, but I wonder if there's a more accessible one.

In fact, I know there is an easier place to start, and it comes from a principle I derived from listening to Jordan Peterson over the past 7 years.

Sit on the side of your bed, and ask yourself, "What horribly stupid things am I doing, and how can I fix them?" From there, you start with the smallest and easiest problem to solve.

The idea that everyone will move in lockstep to an optimally engineered diet is wrong, but the idea that everyone can get there eventually is right.

I don't think that figuring out alignment starts by engineering your diet to an optimal place for your health. I think it will start for each person in an entirely different place, but I do think that if you follow this path, you eventually transcend to a place where you ask, "What should I really be eating?"

I don't know the correct answer, but I think starting with the smallest unit of problem in your life will get you to bigger and bigger problems, and this will lead to transcending the self to a place in which we can properly orient ourselves, and attempt to orient ourselves with each other.

Is the Blueprint Diet Worth a Try?

The answer to this question is, of course, up to you. I think that Bryan has taken it to an extreme.

I understand the philosophy and agree with moving toward the autonomous self. However, this is not practical for every single person. I have the resources necessary to do it, but it would require a fair amount of preparation and money.

I have not publicly spoken about my diet or exercise program, but interestingly enough, I'm already on a whole-food, plant-based diet and eat quite similarly to what Bryan has laid out. I am also doing ATG's workout program, which Bryan seems to include heavily in his exercise routine. Moving from what I do now to testing out Bryan's routine isn't too much of a hurdle, and even then, it isn't as easy as 1, 2, 3.

I have gone to relatively great lengths to automate things in my life so that my brain has the capacity to think about more important things.

When I automate processes in my life, there is one quote that almost ALWAYS rings in my ears before I do so:

"I say unto you: one must still have chaos in oneself to be able to give birth to a dancing star. I say unto you: you still have chaos in yourselves."

― Friedrich Nietzsche, Thus Spoke Zarathustra

Chaos is necessary for life; if we automate every process, I think we no longer have dancing stars. The question for me becomes: is the process of meal selection one that should contain a little chaos to create the dancing stars, or does automating your food selection for optimal health allow your chaotic self to dance more freely in the places you were meant to dance, which may help the star emerge?

All of this does bring to the surface a question that I think we will need to answer if we get to a world where AI can perform nearly all the intellectual tasks that we can:

What does it mean to be human?

A question I will explore another day :)

I think the answer to the question posed in the title of this section is the following:

If varying your food selection and sometimes eating tasty food is sustenance for your inner creative self, then you should indulge that part of you. If automating your food away allows your dancing star to flourish, then that's a good route to go.

The Future of Humanity

Whether Bryan's diet is correct for everyone or not, the philosophy is certainly interesting. I don't know that following the same diet daily for the rest of your life is the answer.

If I think about my life, the more I automate, the more time I have to think about other things. What happens when AGI automates nearly everything humans do and care about now? What does the structure of humanity look like, and how does the human experience evolve with this coming superintelligence?

I don't know the answer to this, but I think that together we can aim to automate the menial things in our lives in a way that frees us to think about what is more meaningful and important to us. As we automate more and more, we will come to see what is truly important to us as a species, and we will inevitably get closer to finding out what it means to be human.

The best thing you can do to be a part of this journey together is to subscribe to this newsletter and share it with someone who you think would also enjoy exploring the Frontier of tech, business, philosophy, and culture together so we don't have to do it alone!

Thanks for reading :)

Until next time - take care, everyone!